# SMART-101 Dataset Release

## Overview

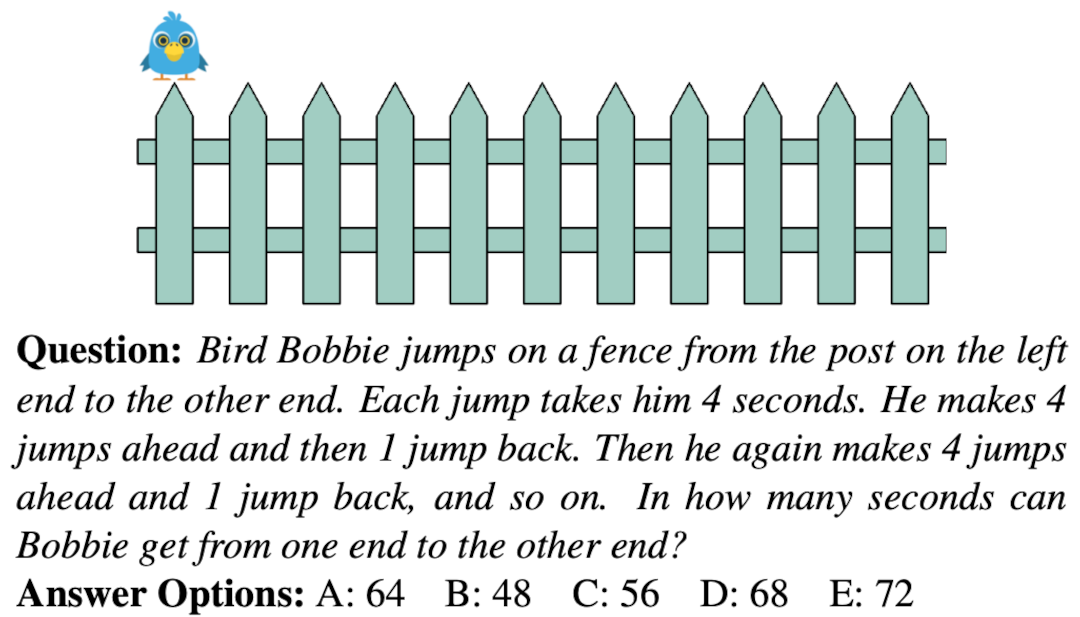

Recent times have witnessed an increasing number of applications of deep neural networks to tasks that require superior cognitive abilities, e.g., playing Go, generating art, etc. Such dramatic progress raises the question: how generalizable are neural networks in solving problems that demand broad skills? To answer this question, we propose SMART: a Simple Multimodal Algorithmic Reasoning Task (and the associated SMART-101 dataset) for evaluating the abstraction, deduction, and generalization abilities of neural networks in solving visuo-linguistic puzzles designed specifically for children in the 6--8 age group. Our dataset consists of 101 unique puzzles; each puzzle comprises a picture and a question (see above), and solving it requires a mix of several elementary skills, including pattern recognition, algebra, and spatial reasoning, among others. To train deep neural networks, we programmatically augment each puzzle to 2,000 new instances, each varying in appearance, text, and solution. In this release, we make public the programmatically generated SMART-101 instances for each puzzle, together with their solutions.

## Dataset Organization

The dataset consists of `101` folders (numbered 1-101); each folder corresponds to one distinct puzzle (the root puzzle). For each root puzzle, 2000 puzzle instances are programmatically created, numbered 1-2000. Every root puzzle folder (index in [1,101]) contains (i) `img/` and (ii) `puzzle_.csv`. The folder `img/` stores the puzzle instance images, and `puzzle_.csv` holds the non-image part of each instance. Specifically, a row of `puzzle_.csv` is the tuple `(id, Question, image, A, B, C, D, E, Answer)`, where `id` is the puzzle instance id (in [1,2000]), `Question` is the puzzle question associated with the instance, `image` is the name of the image (in the `img/` folder) corresponding to this instance `id`, `A, B, C, D, E` are the five answer candidates, and `Answer` is the answer to the question.
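As a minimal sketch of reading one root puzzle folder laid out as described above, the helper below loads the CSV rows and attaches the image path for each instance. The function name `load_puzzle_instances` is hypothetical, and the sketch assumes that the CSV has a header row carrying the field names and that `image` holds a bare file name inside `img/`; neither is guaranteed by this README. The CSV is located by pattern rather than by an exact file name.

```python
import csv
from pathlib import Path


def load_puzzle_instances(root_puzzle_dir):
    """Read the puzzle CSV found in one root puzzle folder (hypothetical helper)."""
    folder = Path(root_puzzle_dir)
    # Locate the CSV by pattern; exactly one match per folder is assumed.
    csv_files = sorted(folder.glob("puzzle_*.csv"))
    if len(csv_files) != 1:
        raise FileNotFoundError(
            f"expected one puzzle CSV in {folder}, found {len(csv_files)}"
        )
    # Assumption: the first CSV row is a header with the field names.
    with csv_files[0].open(newline="", encoding="utf-8") as handle:
        rows = list(csv.DictReader(handle))
    for row in rows:
        # Assumption: `image` is a file name relative to the img/ folder.
        row["image_path"] = str(folder / "img" / row["image"])
    return rows
```

Each returned row is a dictionary keyed by field name, so `row["Question"]`, `row["A"]` through `row["E"]`, and `row["Answer"]` are available as strings.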

## Dataset Splits

In our paper *Are Deep Neural Networks SMARTer than Second Graders?*, we provide four different dataset splits for evaluation, namely (i) Instance Split (IS), (ii) Answer Split (AS), (iii) Puzzle Split (PS), and (iv) Few-shot Split (FS). Below, we provide the details of each split so that fair comparisons can be made against the results in our paper.

### Puzzle Split (PS)

We use the following root puzzle ids as the `Val` and `Test` sets.

| Split | Root Puzzle Id Sets |
| -------- | ------- |
| `Val` | {94, 95, 96} |
| `Test` | {61, 62, 66, 67, 69, 70, 71, 72, 73, 74, 75, 76, 97, 98, 99} |
| `Train` | {1,2,...,101} $\setminus$ (Val $\cup$ Test) |

The evaluation is done on the `Test` puzzles with instance indices 1701-2000.
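The puzzle split above can be written down as plain Python sets, which may help when filtering folders. This is only a sketch: the constant names and the `puzzle_split` helper are illustrative and not part of the release.

```python
# Root puzzle ids, taken from the table above.
ALL_PUZZLES = set(range(1, 102))  # 1..101 inclusive
PS_VAL = {94, 95, 96}
PS_TEST = {61, 62, 66, 67, 69, 70, 71, 72, 73, 74, 75, 76, 97, 98, 99}
PS_TRAIN = ALL_PUZZLES - (PS_VAL | PS_TEST)

# Instance indices of the Test puzzles used for evaluation (1701..2000 inclusive).
PS_EVAL_INSTANCES = range(1701, 2001)


def puzzle_split(root_puzzle_id):
    """Return 'train', 'val', or 'test' for a root puzzle id under PS."""
    if root_puzzle_id in PS_VAL:
        return "val"
    if root_puzzle_id in PS_TEST:
        return "test"
    if root_puzzle_id in PS_TRAIN:
        return "train"
    raise ValueError(f"unknown root puzzle id: {root_puzzle_id}")
```

With these sets, `Train` holds the remaining 83 root puzzles, and the three sets are disjoint by construction.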

### Few-shot Split (FS)

In FS, we randomly include `fsK` instances from the `Val` and `Test` sets used for PS in the training data. In the paper, we used `fsK`$\in$`{10,100}`. These few-shot samples are taken from instance indices 1-1600 of the respective puzzles, and the evaluation is on instance ids 1701-2000.
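One possible way to draw the few-shot samples is sketched below. It assumes that `fsK` instances are drawn per held-out root puzzle and uses a fixed seed for repeatability; both are assumptions of this sketch, and the sampling procedure used in the paper may differ. The names `HELD_OUT_PUZZLES` and `sample_few_shot` are hypothetical.

```python
import random

# Root puzzles held out in PS (Val and Test ids from the Puzzle Split table).
HELD_OUT_PUZZLES = {94, 95, 96} | {
    61, 62, 66, 67, 69, 70, 71, 72, 73, 74, 75, 76, 97, 98, 99
}


def sample_few_shot(fs_k, seed=0):
    """Pick fs_k distinct instance ids in 1-1600 for each held-out puzzle."""
    rng = random.Random(seed)  # hypothetical seed, only for repeatability
    pool = range(1, 1601)      # few-shot samples come from instances 1..1600
    return {
        puzzle_id: sorted(rng.sample(pool, fs_k))
        for puzzle_id in sorted(HELD_OUT_PUZZLES)
    }
```

Calling `sample_few_shot(10)` or `sample_few_shot(100)` mirrors the two `fsK` settings mentioned above; instance ids 1701-2000 are never sampled, so they remain available for evaluation.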

### Instance Split (IS)

We split the instances under every root puzzle as: Train = 1-1600, Val = 1601-1700, Test = 1701-2000. We train the neural network backbone models using the `Train` split puzzle instances from all root puzzles together, and evaluate on the `Test` split.
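The instance ranges above translate directly into a small lookup. The function name `instance_split` is illustrative only.

```python
def instance_split(instance_id):
    """Map an instance id (1..2000) to its IS subset."""
    if 1 <= instance_id <= 1600:
        return "train"
    if 1601 <= instance_id <= 1700:
        return "val"
    if 1701 <= instance_id <= 2000:
        return "test"
    raise ValueError(f"instance id out of range: {instance_id}")
```

Under this mapping each root puzzle contributes 1600 training, 100 validation, and 300 test instances.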

### Answer Split (AS)

For every root puzzle, we find the median answer value among all 2000 instances and use only the instances with that median answer value as the `Test` set for evaluation.
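A rough sketch of this selection for a single root puzzle is shown below. It assumes that answer values are numeric and uses `statistics.median_low` so that the median is always a value that actually occurs among the instances; both choices are assumptions of this sketch and may not match how the split was built for the paper. The function name `answer_split_test_ids` is hypothetical.

```python
from statistics import median_low


def answer_split_test_ids(answers_by_instance):
    """Return the instance ids whose answer equals the median answer value.

    answers_by_instance: dict mapping instance id -> numeric answer value
    (assumed format, for one root puzzle).
    """
    # median_low returns an observed value even for an even-sized sample.
    median_value = median_low(answers_by_instance.values())
    return sorted(
        instance_id
        for instance_id, value in answers_by_instance.items()
        if value == median_value
    )
```

For example, with answers `{1: 3, 2: 5, 3: 5, 4: 9}` the median value is 5, so instances 2 and 3 would form the `Test` set for that puzzle.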

## Contact

Anoop Cherian (cherian@merl.com), Kuan-Chuan Peng (kpeng@merl.com), or Suhas Lohit (slohit@merl.com).

## Citation

```
@article{cherian2022deep,
  title={Are Deep Neural Networks SMARTer than Second Graders?},
  author={Cherian, Anoop and Peng, Kuan-Chuan and Lohit, Suhas and Smith, Kevin and Tenenbaum, Joshua B},
  journal={arXiv preprint arXiv:2212.09993},
  year={2022}
}
```

## Copyright and Licenses

The SMART-101 dataset is released under the `CC-BY-SA-4.0` license, as found in the [LICENSE.txt](LICENSE.txt) file.

All data:

```
Created by Mitsubishi Electric Research Laboratories (MERL), 2022-2023

SPDX-License-Identifier: CC-BY-SA-4.0
```